The Dawn of Computing: Early Processor Beginnings

The evolution of computer processors represents one of the most remarkable technological journeys in human history. From room-sized machines with limited capabilities to today's microscopic powerhouses, processor development has fundamentally transformed how we live, work, and communicate. The story begins in the 1940s with the first electronic computers that used vacuum tubes as their primary processing components.

These early machines were enormous. ENIAC, unveiled in 1946, contained roughly 17,500 vacuum tubes and occupied an entire room. Despite its massive size, it ran on a 100 kHz clock and could perform about 5,000 additions per second. The transition from mechanical to electronic processing marked the first major leap in processor evolution, setting the stage for decades of rapid innovation.

The Transistor Revolution

The invention of the transistor in 1947 by Bell Labs scientists John Bardeen, Walter Brattain, and William Shockley represented a watershed moment in processor development. Transistors were smaller, more reliable, and consumed significantly less power than vacuum tubes. By the late 1950s, transistors had largely replaced vacuum tubes in computer designs, enabling more compact and efficient systems.

The IBM 7090, announced in 1959, exemplified this transition. A transistorized successor to the vacuum-tube IBM 709, it became one of the most successful scientific computers of its era. This period also saw the development of early programming languages and operating systems that would eventually shape modern computing architectures.

The Integrated Circuit Era

The late 1950s brought another revolutionary advancement: the integrated circuit (IC). Jack Kilby at Texas Instruments (1958) and Robert Noyce at Fairchild Semiconductor (1959) independently developed the first working ICs, which allowed multiple transistors and other components to be fabricated on a single piece of semiconductor. Through the 1960s, this innovation dramatically reduced the size and cost of processors while improving reliability and performance.

Intel's introduction of the 4004 microprocessor in 1971 marked the beginning of the modern processor era. This 4-bit processor contained 2,300 transistors and operated at 740 kHz, representing the first commercially available microprocessor. The 4004 demonstrated that complex processing capabilities could be integrated into a single chip, paving the way for the personal computer revolution.

The x86 Architecture Emerges

Intel's 8086 processor, released in 1978, established the x86 architecture that would dominate personal computing for decades. This 16-bit processor introduced features that became standard in subsequent designs, including segmented memory addressing, which let 16-bit registers reach a 1 MB address space, and a richer instruction set than Intel's earlier 8-bit chips. When IBM built its first personal computer in 1981, it chose the closely related 8088, an 8086 variant with an 8-bit external data bus, cementing x86's position in the market.
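The segmented memory addressing mentioned above comes down to a little arithmetic. In the 8086's real mode, a 16-bit segment value is shifted left by four bits and added to a 16-bit offset, yielding a 20-bit physical address and therefore a 1 MiB address space. The Python sketch below models only that calculation; the function and constant names are hypothetical, chosen for illustration rather than taken from Intel documentation.

```python
# Hypothetical model of 8086 real-mode address translation (arithmetic only).
ADDRESS_BITS = 20                        # the 8086 drives a 20-bit address bus
ADDRESS_MASK = (1 << ADDRESS_BITS) - 1   # 0xFFFFF, the highest byte in 1 MiB


def physical_address(segment: int, offset: int) -> int:
    """Combine a 16-bit segment and a 16-bit offset into a 20-bit address."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must each fit in 16 bits")
    # Shift the segment left by 4 bits (multiply by 16) and add the offset.
    # Results past 1 MiB wrap around to low memory, as on the original 8086.
    return ((segment << 4) + offset) & ADDRESS_MASK


if __name__ == "__main__":
    print(hex(physical_address(0x1234, 0x0010)))  # 0x12350
    print(hex(physical_address(0x1235, 0x0000)))  # 0x12350 (same byte)
    print(hex(physical_address(0xFFFF, 0x0010)))  # 0x0 (wrapped past 1 MiB)
    print(2 ** ADDRESS_BITS)                      # 1048576 bytes = 1 MiB
```

Because the segment is multiplied by 16 rather than 65,536, many segment:offset pairs name the same byte. For comparison, the 32-bit addressing of the 80386 raised the directly addressable range to 2^32 bytes, or 4 GiB.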

Throughout the 1980s, processor manufacturers engaged in fierce competition, with companies like AMD emerging as significant players. The introduction of 32-bit processing with Intel's 80386 in 1985 represented another major milestone, enabling more sophisticated operating systems and applications. This period also saw the rise of reduced instruction set computing (RISC) architectures as an alternative approach to processor design.

The Clock Speed Race and Multicore Revolution

The 1990s witnessed an intense focus on increasing clock speeds, and by the turn of the millennium both AMD and Intel were shipping 1 GHz processors. Intel's Pentium processors became household names, while competitors such as AMD's Athlon series pushed performance boundaries. However, physical limits eventually made pure clock-speed increases unsustainable, because every gain in frequency brought higher power consumption and more heat.
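The heat problem follows from a standard first-order model of CMOS switching power, P = αCV²f, where α is the fraction of the circuit switching each cycle, C the switched capacitance, V the supply voltage, and f the clock frequency. This model is not stated in the original text and ignores leakage current, so treat the sketch below as an illustration under those assumptions. Because faster clocks have typically required a higher supply voltage, and power grows with the square of voltage, power rises faster than frequency.

```python
# First-order CMOS dynamic power model: P = alpha * C * V**2 * f.
# Assumption: leakage (static) power is ignored; alpha and C are held fixed.

def dynamic_power(alpha: float, capacitance_f: float,
                  voltage_v: float, frequency_hz: float) -> float:
    """Dynamic switching power in watts."""
    return alpha * capacitance_f * voltage_v ** 2 * frequency_hz


def relative_dynamic_power(freq_scale: float, voltage_scale: float) -> float:
    """New-to-old power ratio after scaling frequency and voltage.
    alpha and C cancel out of the ratio, so they are not needed here."""
    return voltage_scale ** 2 * freq_scale


if __name__ == "__main__":
    # Hypothetical scenario: 50% higher clock with no voltage change...
    print(f"{relative_dynamic_power(1.5, 1.0):.2f}x power")  # 1.50x power
    # ...versus a 50% higher clock that also needs 20% more voltage.
    print(f"{relative_dynamic_power(1.5, 1.2):.2f}x power")  # 2.16x power
```

With these hypothetical scale factors, a 50% clock increase that also needs 20% more voltage raises dynamic power by 1.2² × 1.5 = 2.16 times, more than doubling the heat the chip must shed.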

The industry responded by shifting toward multicore architectures. Instead of making single cores faster, manufacturers began integrating multiple processing cores on a single chip. This approach allowed for better performance scaling while managing power efficiency. Intel's Core 2 Duo, released in 2006, demonstrated the effectiveness of this strategy and established multicore processing as the new standard.
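The multicore trade-off can be made concrete by combining the power model P ∝ V²f with Amdahl's law, a standard estimate of parallel speedup that the original text does not mention. The sketch below compares one full-speed core against several slower, lower-voltage cores; the 0.8 scale factors in the example are hypothetical, and leakage power, memory bottlenecks, and shared caches are ignored.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Amdahl's law: speedup of `cores` equal cores over one core, for a
    workload whose parallelizable share is parallel_fraction (0 to 1)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / cores)


def multicore_tradeoff(parallel_fraction: float, cores: int,
                       freq_scale: float, voltage_scale: float):
    """Relative (throughput, dynamic power) of `cores` scaled-down cores
    versus one full-speed core, using P ~ V**2 * f per core."""
    # Each core runs freq_scale times as fast; Amdahl's law gives the gain
    # from spreading the work across cores.
    throughput = freq_scale * amdahl_speedup(parallel_fraction, cores)
    # Total power is the per-core power multiplied by the number of cores.
    power = cores * voltage_scale ** 2 * freq_scale
    return throughput, power


if __name__ == "__main__":
    # Hypothetical comparison: two cores at 80% frequency and 80% voltage.
    for p in (1.0, 0.9, 0.5):
        throughput, power = multicore_tradeoff(p, cores=2,
                                               freq_scale=0.8,
                                               voltage_scale=0.8)
        print(f"parallel fraction {p:.1f}: "
              f"throughput x{throughput:.2f}, power x{power:.2f}")
```

Under these assumptions, two cores at 80% frequency and voltage draw about 1.02 times the power of one full-speed core. A fully parallel workload runs about 1.6 times faster, but a workload that is half serial gains only about 7%, which is why the payoff from multicore designs depends on how much of a program can run in parallel.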

Specialization and Heterogeneous Computing

Modern processor evolution has embraced specialization to address specific computational needs. Graphics processing units (GPUs) evolved from fixed-function graphics accelerators into general-purpose parallel processors capable of handling scientific computing and artificial intelligence workloads. The integration of GPU capabilities directly into CPUs, as seen in AMD's APU designs, represents another significant trend.

Today's processors also incorporate specialized units for tasks like AI acceleration, cryptography, and media processing. Apple's M-series chips exemplify this approach, combining high-performance and high-efficiency CPU cores with an integrated GPU and a Neural Engine for machine learning workloads. This heterogeneous computing model allows for optimized performance across diverse applications.

Current Trends and Future Directions

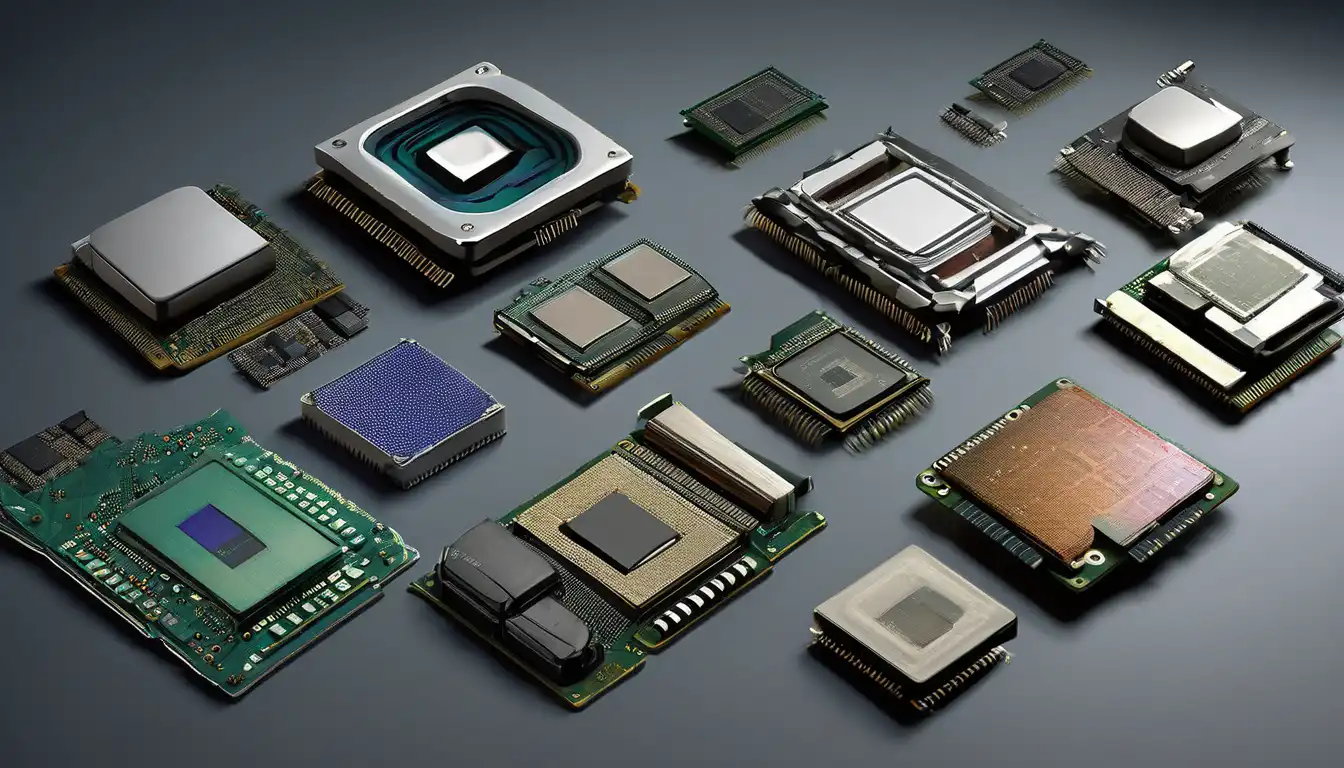

The evolution of computer processors continues apace, driven by emerging technologies and changing computational demands. Chiplet architectures, which combine multiple specialized dies in a single package, are one of the most significant recent shifts in processor design. This approach lets manufacturers mix and match process technologies and improve manufacturing yields while maintaining performance, since a small die is less likely than a large one to contain a fatal defect.
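The yield advantage of chiplets can be estimated with the simple Poisson yield model, Y = exp(-A * D), where A is die area and D is defect density. This model and the numbers in the example are illustrative assumptions rather than figures from the original text. The sketch ignores wafer-edge losses, packaging failures, and the extra die-to-die interconnect area chiplets require, and it assumes each small die is tested and discarded individually if defective.

```python
import math


def poisson_yield(die_area_cm2: float, defects_per_cm2: float) -> float:
    """Expected fraction of defect-free dies: Y = exp(-A * D)."""
    return math.exp(-die_area_cm2 * defects_per_cm2)


def silicon_per_good_product(total_area_cm2: float, dies: int,
                             defects_per_cm2: float) -> float:
    """Wafer area consumed per working product when the design is split
    into `dies` equal dies that are tested and discarded individually."""
    die_area = total_area_cm2 / dies
    # Each good die costs die_area / yield of wafer area on average; a
    # product needs `dies` of them, which simplifies to total_area / yield.
    return total_area_cm2 / poisson_yield(die_area, defects_per_cm2)


if __name__ == "__main__":
    DEFECT_DENSITY = 0.1  # hypothetical defects per cm^2
    TOTAL_AREA = 6.0      # hypothetical design size in cm^2
    for dies in (1, 4):
        y = poisson_yield(TOTAL_AREA / dies, DEFECT_DENSITY)
        area = silicon_per_good_product(TOTAL_AREA, dies, DEFECT_DENSITY)
        print(f"{dies} die(s): yield {y:.1%}, "
              f"wafer area per good product {area:.2f} cm^2")
```

With a hypothetical 6 cm² design and 0.1 defects per cm², the monolithic die yields about 55% and consumes roughly 10.9 cm² of wafer per working chip, while four 1.5 cm² chiplets each yield about 86% and consume roughly 7.0 cm² per working product.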

Quantum computing may represent the next frontier in processor evolution. While still in early stages, quantum processors promise to solve certain problems, such as simulating molecules or factoring large integers, that are believed to be intractable for classical computers. Major technology companies and research institutions are investing heavily in quantum computing research, suggesting that hybrid classical-quantum systems may become practical within the coming decades.

Sustainability and Energy Efficiency

As processor performance continues to advance, energy efficiency has become a critical concern. Manufacturers are developing increasingly sophisticated power management techniques, such as dynamic voltage and frequency scaling, while the wider industry explores materials like gallium nitride for more efficient power conversion. The shift toward more efficient architectures reflects growing awareness of computing's environmental impact and the need for sustainable technology development.

The evolution of computer processors has transformed nearly every aspect of modern life, from scientific research to everyday communication. As we look toward future developments in areas like neuromorphic computing and advanced AI acceleration, it's clear that processor innovation will continue to drive technological progress for generations to come. The journey from vacuum tubes to quantum computing demonstrates humanity's remarkable capacity for innovation and our relentless pursuit of computational power.